Part I: Seductive Futures

1. Solar Cells and Other Fairy Tales

Once upon a time, a pair of researchers led a group of study participants into a laboratory overlooking the ocean, gave them free unlimited coffee, and assigned them one simple task. The researchers spread out an assortment of magazine clippings and requested that participants assemble them into collages depicting what they thought of energy and its possible future.1 No cost-benefit analyses, no calculations, no research, just glue sticks and scissors. They went to work. Their resulting collages were telling, not for what they contained, but for what they didn’t.

They didn’t dwell on energy-efficient lighting, walkable communities, or suburban sprawl. They didn’t address population, consumption, or capitalism. They instead pasted together images of wind turbines, solar cells, biofuels, and electric cars. When they couldn’t find clippings, they asked to sketch: dams, tidal and wave-power systems, even animal power. They eagerly cobbled together fantastic totems to a gleaming future of power production. As a society, we have done the same.

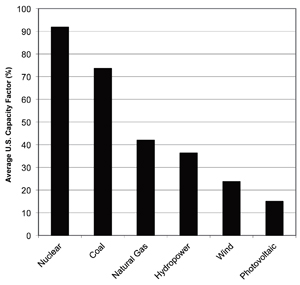

The seductive tales of wind turbines, solar cells, and biofuels foster the impression that with a few technical upgrades, we might just sustain our current energy trajectories (or close to it) without consequence. Media and political coverage lull us into dreams of a clean energy future juxtaposed against a tumultuous past characterized by evil oil companies and the associated energy woes they propagated. Like most fairy tales, this productivist parable contains a tiny bit of truth. And a whole lot of fantasy.

I should warn you in advance: this book has a happy ending, but the joust in this first chapter might not. Even so, let’s first take a moment to consider the promising allure of solar cells. Throughout the diverse disciplines of business, politics, science, academia, and environmentalism, solar cells stand tall as a valuable technology that everyone agrees is worthy of advancement. We find plenty of support for solar cells voiced by:

Politicians,

If we take on special interests, and make aggressive investments in clean and renewable energy, like Google’s done with solar here in Mountain View, then we can end our addiction to oil, create millions of jobs and save the planet in the bargain.

– Barack Obama

Textbooks,

Photovoltaic power generation is reliable, involves no moving parts, and the operation and maintenance costs are very low. The operation of a photovoltaic system is silent, and creates no atmospheric pollution. . . . Power can be generated where it is required without the need for transmission lines. . . . Other innovative solutions such as photovoltaic modules integrated in the fabric of buildings reduce the marginal cost of photovoltaic energy to a minimum. The economic comparison with conventional energy sources is certain to receive a further boost as the environmental and social costs of power generation are included fully in the picture.

– From the textbook, Solar Electricity

Environmentalists,

Solar power is a proven and cost-effective alternative to fossil fuels and an important part of the solution to end global warming. The sun showers the earth with more usable energy in one minute than people use globally in one year.

– Greenpeace

And even oil companies,

Solar solutions provide clean, renewable energy that save you money.

– BP2

We ordinarily encounter the dissimilar views of these groups bound up in a tangle of conflict, but solar energy forms a smooth ground of commonality where environmentalists, corporations, politicians, and scientists can all agree. The notion of solar energy is flexible enough to allow diverse interest groups to take up solar energy for their own uses: corporations crown themselves with halos of solar cells to cast a green hue on their products, politicians evoke solar cells to garner votes, and scientists recognize solar cells as a promising well of research funding. It’s in everyone’s best interest to broadcast the advantages of solar energy. And they do. Here are the benefits they associate with solar photovoltaic technology:

• CO2 reduction: Even if solar cells are expensive now, they’re worth the cost to avoid the more severe dangers of climate change.

• Simplicity: Once installed, solar panels are silent, reliable, and virtually maintenance free.

• Cost: Solar costs are rapidly decreasing.

• Economies of scale: Mass production of solar cells will lead to cheaper panels.

• Learning by doing: Experience gained from installing solar systems will lead to further cost reductions.

• Durability: Solar cells last an extremely long time.

• Local energy: Solar cells reduce the need for expensive power lines, transformers, and related transmission infrastructure.3

Where the Internet Ends

All of these benefits seem reasonable, if not downright encouraging; it’s difficult to see why anyone would want to argue with them. Over the past half century, journalists, authors, politicians, corporations, environmentalists, scientists, and others have eagerly ushered a fantasmatic array of solar devices into the spotlight, reported on their spectacular journeys into space, featured their dedicated entrepreneurs and inventors, celebrated their triumphs over dirty fossil fuels, and dared to envisage a glorious solar future for humanity.

The sheer volume of literature on the subject is overwhelming, not just in newspapers, magazines, and books, but also in scientific literature, government documents, corporate materials, and environmental reports: far, far too much to sift through. The various tributes to solar cells could easily fill a library; the critiques would scarcely fill a book bag.

When I searched for critical literature on photovoltaics, Google returned numerous “no results found” errors—an error I’d never seen (or even realized existed) until I began querying for published drawbacks of solar energy. Bumping into the end of the Internet typically requires an especially arduous expedition into the darkest recesses of human knowledge, yet searching for drawbacks of solar cells can deliver you in a click. Few writers dare criticize solar cells, which understandably leads us to presume this sunny resource doesn’t present serious limitations and leaves us clueless as to why nations can’t seem to deploy solar cells on a grander scale. Though if we put on our detective caps and pull out our flashlights, we might just find some explanations lurking in the shadows—perhaps in the most unlikely of places.

Photovoltaics in Sixty Seconds or Less

Historians of technology track solar cells back to 1839 and credit Alexandre-Edmond Becquerel for discovering that certain light-induced chemical reactions produce electrical currents. This remained primarily an intellectual curiosity until 1940, when solid-state diodes emerged to form a foundation for modern silicon solar cells. Silicon solar cells made their debut in space just eighteen years later, aboard the U.S. Navy’s Vanguard 1 satellite.4

Today manufacturers construct solar cells using techniques and materials from the microelectronics industry. They spread layers of p-type silicon and n-type silicon onto substrates. When sunlight hits this silicon sandwich, electricity flows. Brighter sunlight yields more electrical output, so engineers sometimes incorporate mirrors into the design, which capture and direct more light toward the panels. Newer thin-film technologies employ less of the expensive silicon materials. Researchers are advancing organic, polymer, nanodot, and many other solar cell technologies.5 Patent activity in these fields is rising.

Despite having been around for so long, solar technologies have largely managed to evade criticism. Nevertheless, there is now more revealing research to draw upon, not from Big Oil and climate change skeptics, but from the very government offices, environmentalists, and scientists promoting solar photovoltaics. I’ll draw primarily from this body of research as we move on.

Powering the Planet with Photovoltaics

When I give presentations on alternative energy, among the most common questions philanthropists, students, and environmentalists ask is, “Why can’t we get our act together and invest in solar cells on a scale that could really create an impact?” It is a reasonable question, and it deserves a reasonable explanation.

Countless articles and books contain a statistic reading something like this: Just a fraction of some-part-of-the-planet would provide all of the earth’s power if we simply installed solar cells there. For instance, environmentalist Lester Brown, president of the Earth Policy Institute, indicates that it is “widely known within the energy community that there is enough solar energy reaching the earth each hour to power the world economy for one year.”6 Even Brown’s nemesis, skeptical environmentalist Bjorn Lomborg, claims that “we could produce the entire energy consumption of the world with present-day solar cell technology placed on just 2.6 percent of the Sahara Desert.”7 Journalists, CEOs, and environmental leaders widely disseminate variations of this statistic, repeating it almost ritualistically in a mantra honoring the monumental promise of solar photovoltaic technologies. The problem with this statistic is not that it is flatly false, but that it is somewhat true.

“Somewhat true” might not seem adequate for making public policy decisions, but it has been enough to propel this statistic, shiny teeth and all, into the limelight of government studies, textbooks, official reports, environmental statements, and the psyches of millions of people. It has become an especially powerful rhetorical device despite its misleading flaw. While it’s certainly accurate to state that the quantity of solar energy hitting that small part of the desert is equivalent to the amount of energy we consume, it does not follow that we can harness it, a leap many solar promoters explicitly or implicitly make when they repeat the statistic. Similarly, any physicist can explain how a single twenty-five-cent quarter contains enough energy bound up in its atoms to briefly power the entire earth, but since we have no way of accessing these forces, the quarter remains a humble coin rather than a solution to our energy needs. The same limitation holds when considering solar energy.
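The quarter analogy can be checked with E = mc². This is a rough sketch under assumptions that are not in the text: a U.S. quarter with a mass of about 5.67 grams, and world primary energy use of roughly 600 exajoules per year. With those inputs, the coin’s mass-energy would run the world for something like half a minute: energy that exists on paper but that no one can practically release.

```python
# Rough mass-energy check for the "quarter" analogy (E = m * c**2).
# Assumed values (not from the text): a U.S. quarter masses about 5.67 g,
# and world primary energy use is about 600 exajoules (6.0e20 J) per year.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
QUARTER_MASS_KG = 5.67e-3
WORLD_ENERGY_J_PER_YEAR = 6.0e20
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def rest_mass_energy_joules(mass_kg: float) -> float:
    """Energy equivalent of a mass at rest, in joules."""
    return mass_kg * SPEED_OF_LIGHT_M_PER_S ** 2


quarter_energy_j = rest_mass_energy_joules(QUARTER_MASS_KG)
world_power_w = WORLD_ENERGY_J_PER_YEAR / SECONDS_PER_YEAR  # average demand
seconds_of_world_supply = quarter_energy_j / world_power_w

print(f"energy locked in one quarter: {quarter_energy_j:.2e} J")
print(f"average world power demand:   {world_power_w / 1e12:.0f} TW")
print(f"seconds of world supply:      {seconds_of_world_supply:.0f}")
```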

Skeptical? I was too. And we’ll come to that. But first, let’s establish how much it might actually cost to build a solar array capable of powering the planet with today’s technology (saying nothing yet about the potential for future cost reductions). By comparing global energy consumption with the rosiest photovoltaic cost estimates, courtesy of solar proponents themselves, we can roughly sketch a total expense. The solar cells would cost about $59 trillion; the mining, processing, and manufacturing facilities to build them would cost about $44 trillion; and the batteries to store power for evening use would cost $20 trillion, bringing the total to about $123 trillion plus about $694 billion per year for maintenance.8 Keep in mind that the entire gross domestic product (GDP) of the United States, which includes all food, rent, industrial investments, government expenditures, military purchasing, exports, and so on, is only about $14 trillion. This means that if every American were to go without food, shelter, protection, and everything else while working hard every day, naked, we might just be able to build a photovoltaic array to power the planet in about a decade. Unfortunately, these estimates are optimistic.
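The arithmetic behind the “about a decade” figure is simple enough to reproduce. This minimal sketch uses only the dollar figures quoted above; ignoring maintenance, financing, and construction time is a simplification of the sketch, not of the cited estimate.

```python
# Capital costs quoted in the text, in trillions of U.S. dollars.
capital_costs_trillion = {
    "solar cells": 59.0,
    "mining, processing, and manufacturing facilities": 44.0,
    "batteries for evening storage": 20.0,
}
ANNUAL_MAINTENANCE_TRILLION = 0.694  # about $694 billion per year (from the text)
US_GDP_TRILLION = 14.0               # approximate U.S. GDP (from the text)

capital_total = sum(capital_costs_trillion.values())
# Years of the *entire* U.S. GDP needed to cover the capital cost alone;
# maintenance, financing, and construction time are ignored here.
years_of_gdp = capital_total / US_GDP_TRILLION

print(f"capital total: ${capital_total:.0f} trillion")
print(f"years of total U.S. GDP: {years_of_gdp:.1f}")
print(f"plus ${ANNUAL_MAINTENANCE_TRILLION * 1000:.0f} billion per year in upkeep")
```

The roughly 8.8 years of capital spending, plus upkeep that begins as soon as panels are installed, is what rounds up to “about a decade.”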

If actual installed costs for solar projects in California are any guide, a global solar program would cost roughly $1.4 quadrillion, about one hundred times the United States GDP.9 Mining, smelting, processing, shipping, and fabricating the panels and their associated hardware would yield about 149,100 megatons of CO2.10 And everyone would have to move to the desert, or else transmission losses would make the plan unworkable.
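A similar sketch compares the California-based figure with U.S. GDP and with the optimistic $123 trillion estimate, again using only numbers quoted in the text.

```python
# Figures quoted in the text, in trillions of U.S. dollars.
ROSY_ESTIMATE_TRILLION = 123.0        # proponents' optimistic capital cost
CALIFORNIA_BASED_TRILLION = 1_400.0   # $1.4 quadrillion, from California installed costs
US_GDP_TRILLION = 14.0

gdp_multiple = CALIFORNIA_BASED_TRILLION / US_GDP_TRILLION
markup_over_rosy = CALIFORNIA_BASED_TRILLION / ROSY_ESTIMATE_TRILLION

print(f"multiple of U.S. GDP: {gdp_multiple:.0f}")            # 100
print(f"multiple of the rosy estimate: {markup_over_rosy:.1f}")
```

Field costs, in other words, run more than eleven times the proponents’ own estimate.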

That said, few solar cell proponents believe that nations ought to rely exclusively on solar cells. They typically envision an alternative energy future with an assortment of energy sources: wind, biofuels, tidal and wave power, and others. Still, calculating the total bill for solar raises some critical questions. Could manufacturing and installing photovoltaic arrays with today’s technology be equally absurd at any scale? Does it just not seem as bad when we are throwing away a few billion dollars at a time? Perhaps. Or perhaps none of this will really matter since photovoltaic costs are dropping so quickly.

Price Check

Kathy Loftus, the executive in charge of energy initiatives at Whole Foods Market, can appreciate the high costs of solar cells today, but she is optimistic about the future: “We’re hoping that our purchases along with some other retailers will help bring the technology costs down.”11 Solar proponents share her enthusiasm. The Earth Policy Institute claims solar electricity costs are “falling fast due to economies of scale as rising demand drives industry expansion.”12 The Worldwatch Institute agrees, claiming that “analysts and industry leaders alike expect continued price reductions in the near future through further economies of scale and increased optimization in assembly and installation.”13

At first glance, this is great news; if solar cell costs are dropping so quickly, it may not be long before we can actually afford to clad the planet with them. There is little disagreement among economists that manufacturing ever larger quantities of solar cells yields noticeable economies of scale. It is less clear, however, whether they consider these cost reductions significant in the larger scheme of things, and they cite several reasons for doubt.

First, it is precarious to assume that the solar industry will realize substantial economies of scale before solar cells become cost competitive with other forms of energy production. Solar photovoltaic investments have historically been tossed about indiscriminately like a small raft in the larger sea of the general economy. Expensive solar photovoltaic installations gain popularity during periods of high oil costs, but are often the first line items legislators cut when oil becomes cheaper again. For instance, during the oil shock of the 1970s, politicians held up solar cells as a solution, only to toss them aside once the oil price tide subsided. More recent economic turmoil forced Duke Energy to slash $50 million from its solar budget, BP cut its photovoltaic production capacity, and Solyndra filed for Chapter 11 bankruptcy.14 Economists argue that it’s difficult to achieve significant economies of scale in an industry with such violent swings between investment and divestment.

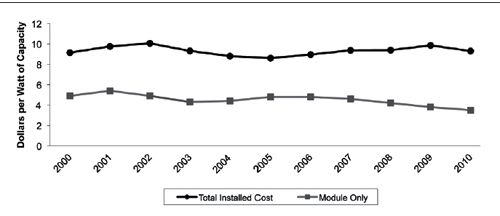

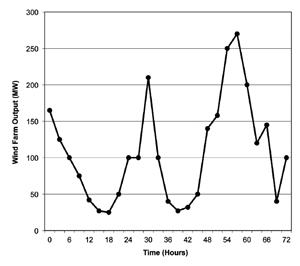

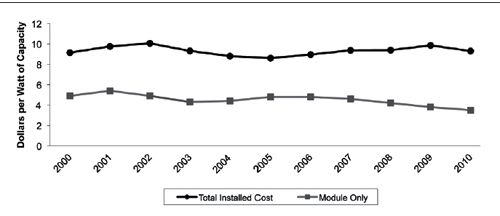

Figure 1: California solar system costs. Installed photovoltaic system costs in California remain high due to a variety of expenses that are not technically determined. (Data from California Energy Commission and Solarbuzz)

Second, solar advocates underscore dramatic photovoltaic cost reductions since the 1960s, leaving an impression that the chart of solar cell prices is shaped like a sharply downward-tilted arrow. But according to the solar industry, prices from the most recent decade have flattened out. Between 2004 and 2009, the installed cost of solar photovoltaic modules actually increased; only when the financial crisis swung into full motion over subsequent years did prices soften. So is this just a bump in the downward-pointing arrow? Probably. However, even if solar cells become markedly cheaper, the drop may not generate much impact since photovoltaic panels themselves account for less than half the cost of an installed solar system, according to the industry.15 Based on research by solar energy proponents and field data from the California Energy Commission (one of the largest clearinghouses of experience-based solar cell data), cheaper photovoltaics won’t offset escalating expenditures for insurance, warranty expenses, materials, transportation, labor, and other requirements.16 Low-tech costs are claiming a larger share of the high-tech solar system price tag.
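The point about module share can be made concrete. If non-module costs stay fixed, a fractional drop in module price shrinks the installed price by only that drop multiplied by the modules’ share of the total. The 45 percent share and 50 percent price drop below are hypothetical values chosen to fit “less than half,” not industry data.

```python
def installed_cost_reduction(module_share: float, module_price_drop: float) -> float:
    """Fractional drop in installed-system price when only module prices fall.

    module_share: modules' fraction of the installed price (0 to 1).
    module_price_drop: fractional fall in module price (0 to 1).
    Non-module costs (labor, insurance, permits, hardware) are held fixed.
    """
    for name, value in (("module_share", module_share),
                        ("module_price_drop", module_price_drop)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return module_share * module_price_drop


# Hypothetical case: modules are 45% of the installed price and get 50% cheaper.
print(f"installed price falls by {installed_cost_reduction(0.45, 0.50):.1%}")
```

Even free modules would leave such a system costing 55 percent of its former price, and rising non-module costs would erode even that saving.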

Finally, unforeseen limitations are blindsiding the solar industry as it grows.17 Fire departments restrict solar roof installations and homeowner associations complain about the ugly arrays. Repair and maintenance costs remain stubbornly high. Adding to the burden, solar arrays now often require elaborate alarm systems and locking fasteners; without such protection, thieves regularly steal the valuable panels. Police departments throughout the country are increasingly reporting photovoltaic pilfering, which is incidentally inflating home insurance premiums. For instance, California resident Glenda Hoffman woke up one morning to discover that thieves had stolen sixteen solar panels from her roof as she slept. The cost to replace the system came to $95,000, an expense her insurance company covered. Nevertheless, she intends to protect the new panels herself, warning, “I have a shotgun right next to the bed and a .22 under my pillow.”18

Disconnected: Transmission and Timing

Solar cells offer transmission benefits in niche applications when they supplant disposable batteries or other expensive energy supply options. For example, road crews frequently use solar cells in tandem with rechargeable battery packs to power warning lights and monitoring equipment along highways. In remote and poor equatorial regions of the world, tiny amounts of expensive solar energy can generate a sizable impact on families and their communities. Here, solar cells provide a viable alternative to candles, disposable batteries, and kerosene lanterns, which are expensive, dirty, unreliable, and dangerous.

Given the appropriate socioeconomic context, solar energy can help villages raise their standards of living. Radios enable farmers to monitor the weather and connect families with news and cultural events. Youth who grow up with evening lighting, and thus a better chance for education, are more likely to wait before getting married and have fewer, healthier children if they become parents.19 This allows the next generation of the village to grow up in more economically stable households with extra attention and resources allotted to them.

Could rich nations realize similar transmission-related benefits? Coal power plants require an expensive network of power lines and transformers to deliver their power. Locally produced solar energy may still require a transformer, but it bypasses the long-distance transmission step. Evading transmission lines during high midday demand is presumably beneficial since this is precisely when fully loaded transmission lines heat up, which increases their resistance and thus wastes more energy as heat. Solar cells also generate their peak output right when users need it most, at midday on hot sunny days as air conditioners run full tilt. Electricity is worth more at these times because it is in short supply. During these periods, otherwise dormant power facilities, called peaker plants, fire up to meet spikes in electrical demand. Peaker plants are more expensive and less efficient than base-load plants, so avoiding their use is especially valuable. Yet analysts often evaluate and compare solar power costs against average utility rates.20 This undervalues solar’s midday advantage. Once these timing benefits are taken into account, the value of solar cell output rises by up to 20 percent.
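The gap between valuing solar output at average rates and valuing it at the hour it is produced can be sketched as an output-weighted average price. The hourly output and price profiles below are invented for illustration; with these particular numbers the timing premium comes out around 12 percent, inside the “up to 20 percent” range above, but real premiums depend on local demand and market data.

```python
# Hypothetical hourly profiles for one summer day (illustrative, not measured).
# solar_kwh[h]: energy a small array delivers during hour h.
solar_kwh = ([0.0] * 6
             + [0.1, 0.3, 0.5, 0.7, 0.85, 0.95, 1.0, 0.95, 0.85, 0.7, 0.5, 0.3, 0.1]
             + [0.0] * 5)
# price[h]: value of electricity in $/kWh during hour h,
# higher in the afternoon when peaker plants run.
price = ([0.10] * 6
         + [0.10, 0.11, 0.11, 0.12, 0.12, 0.13, 0.13, 0.14, 0.14, 0.14, 0.14, 0.13, 0.12]
         + [0.12, 0.11, 0.11, 0.10, 0.10])
assert len(solar_kwh) == len(price) == 24


def flat_average_price(prices):
    """Simple time average: the basis of a comparison against average rates."""
    return sum(prices) / len(prices)


def output_weighted_price(prices, output):
    """Average price actually earned by each kWh the array produces."""
    revenue = sum(p * q for p, q in zip(prices, output))
    return revenue / sum(output)


flat = flat_average_price(price)
weighted = output_weighted_price(price, solar_kwh)
timing_premium = weighted / flat - 1

print(f"flat average:   ${flat:.4f}/kWh")
print(f"solar-weighted: ${weighted:.4f}/kWh")
print(f"timing premium: {timing_premium:.0%}")
```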

These transmission and timing advantages prompted Severin Borenstein, director of the University of California Energy Institute, to investigate how large the benefits are in practice. His conclusions are disheartening.

Borenstein’s research suggests that “actual installation of solar PV [photovoltaic] systems in California has not significantly reduced the cost of transmission and distribution infrastructure, and is unlikely to do so in other regions.” Why? First, most transmission infrastructure has already been built, and localized solar-generation effects are not enough to reduce that infrastructure. Even if they were, the savings would be small since solar cells alone would not shrink the breadth of the distribution network. Furthermore, California and the other thirty states with solar subsidies have not targeted investments toward easing tensions in transmission-constrained areas. Dr. Borenstein took into account the advantageous timing of solar cell output, but he ultimately concludes: “The market benefits of installing the current solar PV technology, even after adjusting for its timing and transmission advantages, are calculated to be much smaller than the costs. The difference is so large that including current plausible estimates of the value of reducing greenhouse gases still does not come close to making the net social return on installing solar PV today positive.”21 In a world with limited funds, these findings don’t position solar cells well. Still, solar advocates insist the expensive panels are a necessary investment if we intend to place a stake in the future of energy.

Learning by Doing: Staking Claims on the Future

In the 1980s Ford Motor Company executives noticed something peculiar in their sales figures. Customers were requesting cars with transmissions built in their Japanese plant instead of the American one. This puzzled engineers since both the U.S. and Japanese transmission plants built to the same blueprints and same tolerances; the transmissions should have been identical. They weren’t. When Ford engineers disassembled and analyzed the transmissions, they discovered that even though the American parts met allowable tolerances, the Japanese parts fell within an even tighter tolerance, resulting in transmissions that ran more smoothly and yielded fewer defects, an effect researchers attribute to the prevalent Japanese philosophy of Kaizen. Kaizen is a model of continuous improvement achieved through hands-on experience with a technology. After World War II, Kaizen grew in popularity, structured largely by U.S. military innovation strategies developed by W. Edwards Deming. The day Ford engineers shipped their blueprints to Japan marked the beginning of this design process, not the end. Historians of technological development point to such learning-by-doing effects when explaining numerous technological success stories. We might expect such effects to benefit the solar photovoltaic industry as well.

Indeed, there are many cases where this kind of learning by doing aids the solar industry. For instance, the California Solar Initiative solved numerous unforeseen challenges during a multiyear installation of solar systems throughout the state: unexpected and burdensome administration requirements, lengthened application processing periods, extended payment times, interconnection delays, extra warranty expenses, and challenges in metering and monitoring the systems. Taken together, these challenges spurred learning that would not have been possible without the hands-on experience of running a large-scale solar initiative.22 Solar proponents claim this kind of learning is bringing down the cost of solar cells.23 But what portion of photovoltaic price drops over the last half century resulted from learning-by-doing effects and what portion evolved from other factors?

When Gregory Nemet from the Energy and Resources Group at the University of California disentangled these factors, he found learning-by-doing innovations contributed only slightly to solar cell cost reductions over the last thirty years. His results indicate that learning from experience “only weakly explains change in the most important factors—plant size, module efficiency, and the cost of silicon.”24 In other words, while learning-by-doing effects do influence the photovoltaic manufacturing industry, they don’t appear to justify massive investments in a fabrication and distribution system just for the sake of experience.
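Claims about learning by doing are often expressed as a single-factor experience curve (sometimes called Wright’s law), in which unit cost falls by a fixed fraction each time cumulative production doubles. The sketch below implements that generic textbook model with hypothetical numbers; it is not Nemet’s method. His finding was that much of the decline such a curve credits to experience actually tracked plant size, module efficiency, and silicon prices, which a one-variable curve cannot separate.

```python
import math


def experience_curve_cost(initial_cost: float, initial_volume: float,
                          cumulative_volume: float, learning_rate: float) -> float:
    """Unit cost under a single-factor experience curve (Wright's law).

    learning_rate is the fractional cost drop per doubling of cumulative
    production, e.g. 0.2 means each doubling cuts unit cost by 20 percent.
    """
    if not 0.0 < learning_rate < 1.0:
        raise ValueError("learning_rate must be between 0 and 1")
    exponent = -math.log2(1.0 - learning_rate)  # experience exponent b
    return initial_cost * (cumulative_volume / initial_volume) ** (-exponent)


# Hypothetical numbers: $4.00 per watt after 1 GW of cumulative production,
# 20 percent learning rate, projected to 8 GW (three doublings).
projected = experience_curve_cost(4.00, 1.0, 8.0, 0.20)
print(f"${projected:.3f} per watt")  # $2.048 per watt
```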

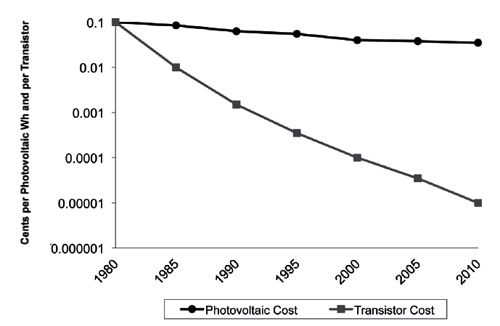

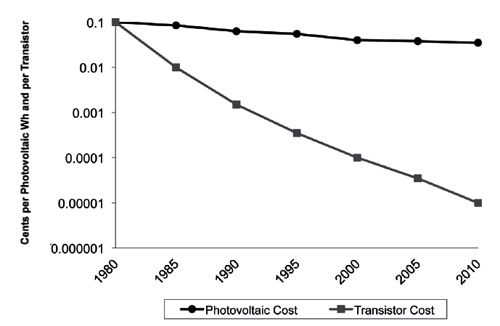

Nevertheless, there is a link that Dr. Nemet didn’t study: silicon’s association with rapid advancements in the microelectronics industry. Microchips and solar cells are both crafted from silicon, so perhaps they are both subject to Moore’s law, the expectation that the number of transistors on a microchip will double every twenty-four months. The chief executive of Nanosolar points out, “The solar industry today is like the late 1970s when mainframe computers dominated, and then Steve Jobs and IBM came out with personal computers.” The author of a New York Times article corroborates the high-tech comparison: “A link between Moore’s law and solar technology reflects the engineering reality that computer chips and solar cells have a lot in common.” You’ll find plenty of other solar proponents industriously evoking the link.25

You’ll have a difficult time finding a single physicist to agree. Squeezing more transistors onto a microchip brings better performance and subsequently lower costs, but miniaturizing and packing solar cells tightly together simply reduces their surface area exposed to the sun’s energy. Smaller is worse, not better. But size comparisons are a literal interpretation of Moore’s law. Do solar technologies follow Moore’s law in terms of cost or performance?

No and no.

Proponents don’t offer data, statistics, figures, or any other explanation beyond the comparison itself: a hit and run. Microchips, solar cells, and Long Beach all contain silicon, but their similarities end there. Certainly solar technologies will improve; there is little argument on that. But expecting them to advance at a pace even approaching that of the computer industry, as we shall see, is far more problematic.
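To see how demanding the Moore’s law comparison is, it helps to translate “double every twenty-four months” into annual rates. The sketch below does that arithmetic; treating a doubling in performance as equivalent to halving cost every two years is an assumption made purely for comparison.

```python
DOUBLING_PERIOD_YEARS = 2.0  # "double every twenty-four months"


def annual_growth_rate(doubling_period_years: float) -> float:
    """Compound annual growth rate implied by a fixed doubling period."""
    return 2.0 ** (1.0 / doubling_period_years) - 1.0


def improvement_factor(years: float, doubling_period_years: float) -> float:
    """Total multiplication after `years` of steady doubling."""
    return 2.0 ** (years / doubling_period_years)


growth = annual_growth_rate(DOUBLING_PERIOD_YEARS)
# Assumption for comparison only: treat each doubling as halving cost per unit.
annual_cost_decline = 1.0 - 1.0 / (1.0 + growth)
thirty_year_factor = improvement_factor(30.0, DOUBLING_PERIOD_YEARS)

print(f"annual growth: {growth:.1%}")                     # 41.4%
print(f"annual cost decline: {annual_cost_decline:.1%}")  # 29.3%
print(f"30-year factor: {thirty_year_factor:,.0f}")       # 32,768
```

A technology truly on Moore’s law would become more than thirty thousand times cheaper in three decades, which is the yardstick the comparison implicitly invokes.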

Solar Energy and Greenhouse Gases

Perhaps no single benefit of solar cells is more cherished than their ability to reduce CO2 emissions. And perhaps no other purported benefit stands on softer ground. To start, a group of Columbia University scholars calculated a solar cell’s lifecycle carbon footprint at twenty-two to forty-nine grams of CO2 per kilowatt-hour (kWh) of solar energy produced.26 This carbon impact is much lower than that of fossil fuels.27 Does this offer justification for subsidizing solar panels?

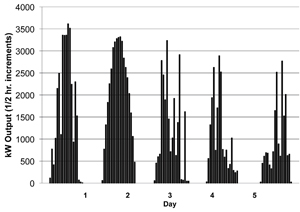

Figure 2: Solar module costs do not follow Moore’s law. Despite the common reference to Moore’s law by solar proponents, three decades of data show that photovoltaic module cost reductions do not mirror cost reductions in the microelectronics industry. Note the logarithmic scale. (Data from Solarbuzz and Intel)

We can begin by considering the market price of greenhouse gases like CO2. In Europe companies must buy vouchers to emit CO2, which trade at around twenty to forty dollars per ton. Most analysts expect American permits to stabilize on the open market somewhere below thirty dollars per ton.28 Today’s solar technologies would compete with coal only if carbon credits rose to three hundred dollars per ton. Photovoltaics could nominally compete with natural gas only if carbon offsets skyrocketed to six hundred dollars per ton.29 It is difficult to conceive of conditions that would thrust CO2 prices to such stratospheric levels in real terms. Even some of the most expensive options for dealing with CO2 would become cost competitive long before today’s solar cell technologies. If limiting CO2 is our goal, we might be better off directing our time and resources to those options first; solar cells seem a wasteful and pricey strategy.
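Break-even carbon prices like these follow from a simple ratio: the extra cost of each solar kilowatt-hour divided by the CO2 it avoids. The sketch below runs that ratio in reverse to show what cost gap the quoted thresholds imply. The emission factors are assumptions, not figures from the text: roughly 1.0 kg of CO2 per kWh for coal, 0.45 kg for natural gas, and 0.035 kg for solar (near the middle of the Columbia range of twenty-two to forty-nine grams).

```python
def breakeven_carbon_price(cost_premium_per_kwh: float,
                           avoided_kg_co2_per_kwh: float) -> float:
    """Carbon price ($ per tonne CO2) at which avoided emissions pay for
    a low-carbon source's extra cost per kWh."""
    return cost_premium_per_kwh / (avoided_kg_co2_per_kwh / 1000.0)


def implied_cost_premium(carbon_price_per_tonne: float,
                         avoided_kg_co2_per_kwh: float) -> float:
    """Inverse: the per-kWh cost gap that a given break-even price implies."""
    return carbon_price_per_tonne * avoided_kg_co2_per_kwh / 1000.0


# Assumed emission factors in kg CO2 per kWh (not from the text).
COAL, GAS, SOLAR = 1.0, 0.45, 0.035

vs_coal = implied_cost_premium(300.0, COAL - SOLAR)
vs_gas = implied_cost_premium(600.0, GAS - SOLAR)
credit_at_30 = implied_cost_premium(30.0, COAL - SOLAR)

print(f"gap vs coal implied by $300/t: ${vs_coal:.3f}/kWh")
print(f"gap vs gas implied by $600/t:  ${vs_gas:.3f}/kWh")
print(f"credit vs coal at $30/t:       ${credit_at_30:.3f}/kWh")
```

Under these assumptions both thresholds imply a solar cost gap of roughly 25 to 29 cents per kWh, whereas a $30 permit would credit solar with only about 3 cents per kWh against coal.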

Unfortunately, there’s more. Not only are solar cells an overpriced tool for reducing CO2 emissions, but their manufacturing process is also one of the largest emitters of hexafluoroethane (C2F6), nitrogen trifluoride (NF3), and sulfur hexafluoride (SF6). Used for cleaning plasma production equipment, these three gruesome greenhouse gases make CO2 seem harmless. As a greenhouse gas, C2F6 is twelve thousand times more potent than CO2, is 100 percent manufactured by humans, and survives ten thousand years once released into the atmosphere.30 NF3 is seventeen thousand times more virulent than CO2, and SF6, the most treacherous greenhouse gas, according to the Intergovernmental Panel on Climate Change, is twenty-five thousand times more threatening. 31 The solar photovoltaic industry is one of the leading and fastest growing emitters of these gases, which are now measurably accumulating within the earth’s atmosphere. A recent study on NF3 reports that atmospheric concentrations of the gas have been rising an alarming 11 percent per year.32
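Two pieces of arithmetic sit behind this paragraph: converting a mass of gas to its CO2 equivalent by multiplying by its warming multiplier, and turning a steady 11 percent annual rise into a doubling time. The sketch uses the multipliers exactly as stated in the text; published IPCC values differ somewhat between assessment reports and time horizons. The doubling time assumes, hypothetically, that the 11 percent rate holds constant.

```python
import math

# Warming multipliers relative to CO2, as stated in the text.
WARMING_MULTIPLIER = {"C2F6": 12_000, "NF3": 17_000, "SF6": 25_000}


def co2_equivalent_kg(gas: str, mass_kg: float) -> float:
    """Mass of CO2 with the same warming effect as mass_kg of `gas`."""
    return WARMING_MULTIPLIER[gas] * mass_kg


def doubling_time_years(annual_growth: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2.0) / math.log1p(annual_growth)


print(co2_equivalent_kg("NF3", 1.0))             # 17000.0
print(f"{doubling_time_years(0.11):.1f} years")  # 6.6 years
```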

Check the Ingredients: Toxins and Waste

In the central plains of China’s Henan Province, local residents grew suspicious of trucks that routinely pulled in behind the playground of their primary school and dumped a bubbling white liquid onto the ground. Their concerns were justified. According to a Washington Post investigative article, the mysterious waste was silicon tetrachloride, a highly toxic chemical that burns human skin on contact, destroys all plant life it comes near, and violently reacts with water.33 The toxic waste was too expensive to recycle, so it was simply dumped behind the playground, daily, for over nine months by Luoyang Zhonggui High-Technology Company, a manufacturer of polysilicon for solar cells. Such cases are far from rare. A report by the Silicon Valley Toxics Coalition claims that as the solar photovoltaic industry expands,

little attention is being paid to the potential environmental and health costs of that rapid expansion. The most widely used solar PV panels have the potential to create a huge new wave of electronic waste (e-waste) at the end of their useful lives, which is estimated to be 20 to 25 years. New solar PV technologies are increasing cell efficiency and lowering costs, but many of these use extremely toxic materials or materials with unknown health and environmental risks (including new nanomaterials and processes).34

For example, sawing silicon wafers releases a dangerous dust as well as large amounts of sodium hydroxide and potassium hydroxide. Crystalline-silicon solar cell processing involves the use or release of chemicals such as phosphine, arsenic, arsine, trichloroethane, phosphorus oxychloride, ethylene vinyl acetate, silicon trioxide, stannic chloride, tantalum pentoxide, lead, hexavalent chromium, and numerous other chemical compounds. Perhaps the most dangerous chemical employed is silane, a highly explosive gas involved in hazardous incidents on a routine basis according to the industry.35 Even newer thin-film technologies employ numerous toxic substances, including cadmium, which is categorized as an extreme toxin by the U.S. Environmental Protection Agency and a Group 1 carcinogen by the International Agency for Research on Cancer. At the end of a solar panel’s usable life, its embedded chemicals and compounds can either seep into groundwater supplies if tossed in a landfill or contaminate air and waterways if incinerated.36

Are the photovoltaic industry’s secretions of heavy metals, hazardous chemical leaks, mining operation risks, and toxic wastes especially problematic today? If you ask residents of Henan Province, the answer will likely be yes. Nevertheless, when pitted against the more dangerous particulate matter and pollution from the fossil-fuel industry, the negative consequences of solar photovoltaic production don’t seem significant at all. Compared to the fossil-fuel giants, the photovoltaic industry is tiny, supplying less than a hundredth of 1 percent of America’s electricity.37 (If the text on this page represented total U.S. power supply, the photovoltaic portion would fit inside the period at the end of this sentence.) If photovoltaic production grows, so will the associated side effects.

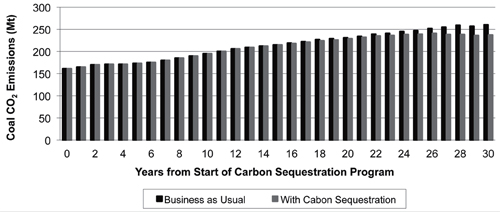

Further, as we’ll explore in future chapters, even if the United States expands solar energy capacity, this may increase coal use rather than replace it. There are far more effective ways to invest our resources, ways that will displace coal consumption—strategies that will lessen, not multiply, the various ecological consequences of energy production. Yet we have much to discuss before coming to those—most immediately, a series of surprises.

Photovoltaic Durability: A Surprise Inside Every Panel

The United Arab Emirates recently commissioned the largest cross-comparison test of photovoltaic modules to date in preparation for building an ecometropolis called Masdar City. The project’s technicians installed forty-one solar panel systems from thirty-three different manufacturers in the desert near Abu Dhabi’s international airport.38 They designed the test to differentiate between cells from various manufacturers, but once the project was initiated, it quickly drew attention to something else: the drawbacks that all of the cells shared, regardless of their manufacturer.

Solar cell firms generally test their panels in the most ideal of conditions: a Club Med of controlled environments. The real-world desert outside Masdar City proved less accommodating. Atmospheric humidity and haze reflected and dispersed the sun’s rays. Even more problematic was the dust, which technicians had to scrub off almost daily. Soiling is not always so easy to remove. Unlike Masdar’s panels, hovering just a few feet above the desert sands, many solar installations perch high atop steep roofs. Owners must tango with gravity to clean their panels or hire a stand-in to dance for them. Researchers discovered that soiling routinely cut the electrical output of a San Diego site by 20 percent during the dusty summer months. In fact, according to researchers from the photovoltaic industry, soiling effects are “magnified where rainfall is absent in the peak-solar summer months, such as in California and the Southwest region of the United States,” or in other words, right where the prime real estate for solar energy lies.39

When it comes to cleanliness, solar cells are prone to the same vulnerability as clean, white dress shirts: small blotches reduce their value dramatically. Due to wiring characteristics, solar output can drop disproportionately if even tiny fragments of the array are blocked, making it essential to keep the entire surface clear of the smallest obstructions, according to manufacturers. Bird droppings, shade, leaves, traffic dust, pollution, hail, ice, and snow all induce headaches for solar cell owners as they attempt to keep the entirety of their arrays in constant contact with the sunlight that powers them. Under unfavorable circumstances, these soiling losses can climb to 80 percent in the field.40

When journalists toured Masdar’s test site, they visited the control room that provided instant energy readouts from each company’s solar array. On that late afternoon, the journalists noted that the most productive unit was pumping out four hundred watts and the least productive under two hundred. All of the units were rated at one thousand watts maximum. This peak output, however, can only theoretically occur briefly at midday, when the sun is at its brightest, and only if the panels are located within an ideal latitude strip and tilted in perfect alignment with the sun (and all other conditions are near perfect as well). The desert outside Masdar City seems like one of the few ideal locations on the planet for such perfection. Unfortunately, during the midday hours of the summer, all of the test cells became extremely hot, up to 176 degrees Fahrenheit (80°C), as they baked in the desert sun. Due to the temperature sensitivity of the photovoltaic cells, their output was markedly hobbled across the board, right at the time they should have been producing their highest output.41 So who won the solar competition in Masdar City? Perhaps nobody.

In addition to haze, humidity, soiling, misalignment, and temperature sensitivity, silicon solar cells suffer an aging effect that decreases their output by about 1 percent or more per year.42 Newer thin-film, polymer, paint, and organic solar technologies degrade even more rapidly, with some studies recording degradation of up to 50 percent within a short period of time. This limitation is regularly concealed because of the way reporters, corporations, and scientists present these technologies.43
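
To see what “about 1 percent per year” adds up to over a panel’s working life, here is a minimal Python sketch. It assumes the loss compounds at exactly 1 percent annually (actual rates vary by module and climate, and some analyses treat the loss as linear rather than compounding), and it borrows the 25-year horizon from the upper end of the 20-to-25-year lifespan cited earlier.

```python
def remaining_output_fraction(annual_loss: float, years: int) -> float:
    """Fraction of original output left after compounding a fixed annual degradation rate."""
    return (1.0 - annual_loss) ** years

# Assumption: exactly 1 percent loss per year, compounded, over 25 years.
fraction = remaining_output_fraction(0.01, 25)
print(f"Output remaining after 25 years: {fraction:.0%}")
```

Under these assumptions, a module that started at its full rating would retain a bit under four fifths of it by year 25, before counting any soiling, heat, or inverter losses.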

For instance, scientists may develop a thin-film panel achieving, say, 13 percent overall efficiency in a laboratory. However, due to production limitations, the company that commercializes the panel will typically only achieve a 10 percent overall efficiency in a prototype. Under the best conditions in the field, this may drop to 7–8.5 percent overall efficiency due to degradation effects alone.44 Even then, the direct current (dc) output is not usable in a household until it is converted. Electrical inverters transform the dc output of solar cells into the higher-voltage alternating current (ac) that appliances and lights require. Inverters are 70–95 percent efficient, depending on the model and loading characteristics. As we have seen, other situational factors drag performance down even further. Yet when laboratory scientists and corporate PR teams write press releases, they report the more favorable figure, in this case 13 percent. Journalists at even the most esteemed publications will often simply transpose this figure into their articles. Engineers, policy analysts, economists, and others in turn transpose the figure into their assessments.
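
The gap between the press-release figure and what actually reaches the wall socket can be sketched in a few lines of Python. The efficiency values are the illustrative figures from the paragraph above; treating delivered efficiency as a simple product of field efficiency and inverter efficiency is a simplifying assumption that ignores soiling, heat, haze, and misalignment, all of which would push the result lower still.

```python
# Illustrative figures from the text; not measurements of any specific product.
LAB_EFFICIENCY = 0.13
PROTOTYPE_EFFICIENCY = 0.10
FIELD_EFFICIENCY_RANGE = (0.07, 0.085)     # after degradation, best field conditions
INVERTER_EFFICIENCY_RANGE = (0.70, 0.95)   # dc-to-ac conversion

def delivered_ac_efficiency(field_eff: float, inverter_eff: float) -> float:
    """Fraction of incident sunlight delivered as usable ac (simplified: product of stages)."""
    return field_eff * inverter_eff

low = delivered_ac_efficiency(FIELD_EFFICIENCY_RANGE[0], INVERTER_EFFICIENCY_RANGE[0])
high = delivered_ac_efficiency(FIELD_EFFICIENCY_RANGE[1], INVERTER_EFFICIENCY_RANGE[1])

print(f"Press-release figure: {LAB_EFFICIENCY:.1%}")
print(f"Prototype figure: {PROTOTYPE_EFFICIENCY:.1%}")
print(f"Delivered ac efficiency: {low:.1%} to {high:.1%}")
```

Under these assumptions, a panel advertised at 13 percent delivers roughly 5 to 8 percent of incoming sunlight as usable household power.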

Illustration 1: Solar system challenges The J. F. Williams Federal Building in Boston was one of the earliest Million Solar Roofs sites and the largest building-integrated array on the East Coast. As with most integrated systems, the solar cells do not align with the sun, greatly reducing their performance. In 2001 technicians replaced the entire array after a system malfunction involving electrical arcing, water infiltration, and broken glass. The new array has experienced system-wide aging degradation as well as localized corrosion, delamination, water infiltration, and sudden module failures. (Photo by Roman Piaskoski, courtesy of the U.S. Department of Energy)

With such high expectations welling up around solar photovoltaics, it is no wonder that newbie solar cell owners are often shocked by the underwhelming performance of their solar arrays in the real world. They may also discover that a simple roof job requires them to disconnect, remove, and reinstall their rooftop arrays. Yet an even larger surprise awaits them: within about five to ten years, their solar system will abruptly stop producing power. Why? Because a key component of the solar system, the electrical inverter, will eventually fail. While the solar cells themselves can survive for twenty to thirty years, the associated circuitry does not. Inverters for a typical ten-kilowatt solar system last about five to eight years, and therefore owners must replace them two to five times during the productive life of a solar photovoltaic system. Fortunately, just about any licensed electrician can easily swap one out. Unfortunately, they cost about eight thousand dollars each.45
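
The “two to five times” figure follows from dividing the panel lifespan by the inverter lifespan. The Python sketch below assumes the original inverter is installed along with the panels, that every replacement lasts exactly as long as the first, and that the eight-thousand-dollar price quoted for a ten-kilowatt system stays constant; all three are simplifications for illustration.

```python
import math

INVERTER_COST_USD = 8_000  # approximate per-inverter cost cited in the text (assumed constant)

def inverter_replacements(panel_life_years: float, inverter_life_years: float) -> int:
    """Number of inverters needed beyond the original one to cover the panel lifespan."""
    inverters_needed = math.ceil(panel_life_years / inverter_life_years)
    return inverters_needed - 1  # the first inverter ships with the system

# Fewest replacements: shorter-lived panels (20 years), longer-lived inverters (8 years).
fewest = inverter_replacements(20, 8)
# Most replacements: longer-lived panels (30 years), shorter-lived inverters (5 years).
most = inverter_replacements(30, 5)

print(f"Replacements: {fewest} to {most}")
print(f"Replacement cost: ${fewest * INVERTER_COST_USD:,} to ${most * INVERTER_COST_USD:,}")
```

Under these assumptions, replacement inverters alone could add roughly sixteen to forty thousand dollars over the life of the array.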

Free Panels, Anyone?

Among the CEOs and chief scientists in the solar industry, there is surprisingly little argument that solar systems are expensive.46 Even an extreme drop in the price of polysilicon, the most expensive technical component, would do little to make solar cells more competitive. Peter Nieh, managing director of Lightspeed Venture Partners, a multibillion-dollar venture capital firm in Silicon Valley, contends that cheaper polysilicon won’t reduce the overall cost of solar arrays much, even if the price of the expensive material dropped to zero.47 Why? Because the cost of other materials such as copper, glass, plastics, and aluminum, as well as the costs for fabrication and installation, represent the bulk of a solar system’s overall price tag. The polysilicon itself represents only about a fifth of the total.
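
Nieh’s point reduces to simple proportion. The sketch below assumes polysilicon accounts for exactly one fifth of the installed price, per the approximate share quoted above, and uses a hypothetical system priced at 100 units so the result reads as a percentage of the original price.

```python
POLYSILICON_SHARE = 0.20  # approximate share of total system cost, per the text

def price_with_discounted_polysilicon(total_price: float, polysilicon_discount: float) -> float:
    """System price after cutting only the polysilicon portion by a fraction between 0 and 1."""
    polysilicon_cost = total_price * POLYSILICON_SHARE
    return total_price - polysilicon_cost * polysilicon_discount

# Hypothetical system priced at 100 units; polysilicon becomes entirely free.
print(price_with_discounted_polysilicon(100.0, 1.0))
```

Even free polysilicon leaves about four fifths of the price tag in place, before counting cleaning, insurance, inverter replacement, or disposal.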

Furthermore, Keith Barnham, an avid solar proponent and senior researcher at Imperial College London, admits that unless efficiency levels are high, “even a zero cell cost is not competitive.”48 In other words, even if someone were to offer you solar cells for free, you might be better off turning the offer down than paying to install, connect, clean, insure, maintain, and eventually dispose of the modules, especially if you live outside the remote, dry, sunny patches of the planet such as the desert extending from southeast California to western Arizona. In fact, the unanticipated costs, performance variables, and maintenance obligations for photovoltaics, too often ignored by giddy proponents of the technology, can swell to unsustainable magnitudes. Occasionally buyers decommission their arrays within the first decade, leaving behind graveyards of toxic panels teetering above their roofs as epitaphs to a fallen dream. Premature decommissioning may help explain why American photovoltaic electrical generation dropped during the last economic crisis even as purported solar capacity expanded.49 Curiously, while numerous journalists reported on solar infrastructure expansion during this period, I was unable to locate a single article covering the contemporaneous drop in the nation’s solar electrical output, which the Department of Energy quietly slid into its annual statistics without a peep.

The Five Harms of Solar Photovoltaics

Are solar cells truly such a waste of money and resources? Is it really possible that today’s solar technologies could be so ineffectual? Could they even be harmful to society and the environment?

It would be egotistically convenient if we could dismiss the costs, side effects, and limitations of solar photovoltaics as the blog-tastic hyperbole of a few shifty hacks. But we can’t. These are the limitations of solar cells as directly reported from the very CEOs, investors, and researchers most closely involved with their real-world application. The side effects and limitations collected here, while cataclysmically shocking to students, activists, business people, and many other individuals I meet, scarcely raise an eyebrow among those in the solar industry who are most intimately familiar with these technologies.

Few technicians would claim that their cells perform as well in the field as they do under the strict controls of a testing laboratory; few electricians would deny that inverters need to be replaced regularly; few energy statisticians would argue that solar arrays have much of an impact on global fossil-fuel consumption; few economists would insist we could afford to power the planet with existing solar technologies. In fact, the shortcomings reported in these pages have become remarkably stable facts within the communities of scientists, engineers, and other experts who work on solar energy. But because of specialization and occupational silo effects, few of these professionals capture the entire picture at once, leaving us too often with disjointed accounts rather than an all-inclusive rendering of the solar landscape.

Collected and assembled into one narrative, the costs, side effects, and limitations of solar photovoltaics become particularly worrisome, especially within the context of our current national finances and limited resources for environmental investments. The point is not to label competing claims about solar cells as simply true or false (we have seen they are a bit of both), but to determine if these claims have manifested themselves in ways and to degrees that validate solar photovoltaics as an appropriate means to achieve our environmental goals.

I’d like to consider some alternate readings of solar cells that are a bit provocative, perhaps even strident. What if we can’t simply roll our eyes at solar cells, shrugging them off as the harmless fascination of a few silver-haired hippies retired to the desert? What if we interpret the powerful symbolism of solar cells as metastasizing in the minds of thoughtful people into a form that is disruptive and detrimental?

First, we could read these technologies as lucrative forms of misdirection—shiny sleights of hand that allow oil companies, for example, to convince motorists that the sparkling arrays atop filling stations somehow make the liquid they pump into their cars less toxic. The fact that some people now see oil companies that also produce solar cells as “cleaner” and “greener” is a testament to a magic trick that has been well performed. Politicians have proven equally adept in such trickery, holding up solar cells to boost their poll numbers in one hand while using the other to palm legislation protecting the interests of status quo industries.

Second, could the glare from solar arrays blind us to better alternatives? If solar cells are seen as the answer, why bother with less sexy options? Many homeowners are keen on upgrading to solar, but because the panels require large swaths of unobstructed exposure to sunlight, solar cells often end up atop large homes sitting on widely spaced lots cleared of surrounding trees, which could have offered considerable passive solar benefits. In this respect, solar cells act to “greenwash” a mode of suburban residential construction, with its car-dependent character, that is hardly worthy of our explicit praise. Sadly, as we shall see ahead, streams of money, resources, and time are diverted away from less visible but more durable solutions in order to irrigate the infertile fields of solar photovoltaics.

Third, might the promise of solar cells act to prop up a productivist mentality, one that insists that we can simply generate more and more power to satisfy our escalating cravings for energy? If clean energy is in the pipeline, there is less motivation to use energy more effectively and responsibly.50

Fourth, we could view solar photovoltaic subsidies as perverse misallocations of taxpayer dollars. For instance, the swanky Honig Winery in Napa Valley chopped down some of its vines to install $1.2 million worth of solar cells. The region’s utility customers paid $400,000 of the tab. The rest of us paid another 30 percent through federal rebates. The 2005 federal energy bill delivered tax write-offs of another 30 percent. Luckily, we can at least visit the winery to taste the resulting vintages, but even though you’re helping pay their electric bill, they’ll still charge you ten dollars for the privilege. Honig is just one of several dozen wineries to take advantage of these government handouts of your money, and wineries represent just a handful of the thousands of industries and mostly wealthy households that have done the same.

Fifth, photovoltaic processes—from mineral exploration to fabrication, delivery, maintenance, and disposal—generate their own environmental side effects. Throughout the photovoltaic lifecycle, as we have reviewed, scientists are discovering the same types of short- and long-term harms that environmentalists have historically rallied against.

Finally, it is worth acknowledging that there are a few places where solar cells can generate an impact today, but for the most part, it’s not here. Plopping solar cells atop houses in the well-trimmed suburbs of America and under the cloudy skies of Germany seems an embarrassing waste of human energy, money, and time. If we’re searching for meaningful solar cell applications today, we’d better look to empowering remote communities whose only other options are sooty candles or expensive disposable batteries—not toward haplessly supplementing power for the wine chillers, air conditioners, and clothes dryers of industrialized nations. We environmentalists have to consider whether it’s reasonable to spend escalating sums of cash to install primitive solar technologies when we could instead fund preconditions that might someday make them viable solutions for a greater proportion of the populace.

Charting a New Solar Strategy

Current solar photovoltaic technologies are ineffective at preventing greenhouse gas emissions and pollution, which is especially disconcerting considering how rapidly their high costs suck money away from more potent alternatives. To put this extreme waste of money into perspective, it would be terrifically more cost-effective to patch the leaky windows of a house with gold leaf rather than install solar cells on its roof. Would we support a government program to insulate people’s homes using gold? Of course not. Anyone could identify the absurd profligacy of such a scheme (in part, because we have not been repeatedly instructed since childhood that it is a virtuous undertaking).

Any number of conventional energy strategies promise higher dividends than solar cell investments. If utilities care to reduce CO2, then for just 10 percent of the Million Solar Roofs Program cost, they could avoid twice the greenhouse gas emissions by simply converting one large coal-burning power plant over to natural gas. If toxicity is a concern, legislators could direct the money toward low-tech solar strategies such as solar water heating, which has a proven track record of success. Or for no net cost at all, we could support strategies to bring our homes and commercial buildings into sync with the sun’s energy rather than working against it. A house with windows, rooflines, and walls designed to soak up or deflect the sun’s energy in a passive way will continue to do so unassumingly for generations, even centuries. Fragile solar photovoltaic arrays, on the other hand, are sensitive to high temperatures, oblige owners to perform constant maintenance, and require extremely expensive components to keep them going for their comparatively short lifespan.

Given the more potent and viable alternatives, it’s difficult to see why federal, state, and local photovoltaic subsidies, including the $3.3 billion solar roof initiative in California, should not be quickly scaled down and eliminated as soon as practicable. It is hard to conceive of a justification for extracting taxes from the working class to fund installations of Stone Age photovoltaic technologies high in the gold-rimmed suburbs of Arizona and California.

The solar establishment will most certainly balk at these observations, quibble about the particulars, and reiterate the benefits of learning by doing and economies of scale. These, however, are tired arguments. Based on experiences in California, Japan, and Europe, we now have solid field data indicating that (1) the benefits of solar cells are insignificant compared to the expense of realizing them, (2) the risks and limitations are substantial, and (3) the solar forecast isn’t as sunny as we’ve been led to believe.

Considering the extreme risks and limitations of today’s solar technologies, the notion that they could create any sort of challenge to the fossil-fuel establishment starts to appear not merely optimistic, but delusional. It’s like believing that new parasail designs could form a challenge to the commercial airline industry. Perhaps the only way we could believe such an outlandish thought is if we are told it over, and over, and over again. In part, this is what has happened. Since we were children, we’ve been promised by educators, parents, environmental groups, journalists, and television reporters that solar photovoltaics will have a meaningful impact on our energy system. The only difference today is that these fairy tales come funded through high-priced political campaigns and the advertising budgets of BP, Shell, Walmart, Whole Foods, and numerous other corporations.

Solar cells shine brightly within the idealism of textbooks and the glossy pages of environmental magazines, but real-world experiences reveal a scattered collection of side effects and limitations that rarely mature into attractive realities. There are many routes to a more durable, just, and prosperous energy system, but the glitzy path carved out by today’s archaic solar cells doesn’t appear to be one of them.